Let’s assume Dave Wichers and the OWASP Top 10 project resist all the pressure and the 2017 edition of the OWASP Top 10 includes the new A7 “Insufficient Attack Protection”. Lately the discussion has become more constructive, so that prospect may not be all that unrealistic. But honestly, I cannot tell whether A7 will stand or not.

I clashed with James Kettle (@albinowax) on the OWASP Top 10 mailing list after his blog post criticising A7 (and the way it made it into the list). In the meantime, James and I have had a more civilised exchange on the merits and dangers of Web Application Firewalls on production systems.

Let’s continue the constructive exchange on A7 and contribute some additional information that is loosely linked to pen-testing systems protected by a WAF.

One argument I frequently hear is that a well-configured WAF (admittedly a rare occurrence) makes it harder to perform penetration tests and to discover vulnerabilities in bug bounty campaigns. Overall security would suffer because the white hats face more resistance.

I do not really buy into this argument, but condescension won’t make it go away. So what is the problem and how can you make sure the white hats don’t lose their edge?

The structural problem is, of course, that penetration testers and bounty hunters approach systems as if they were black hats, namely in the widespread form of black box tests. Penetration testers look through the eyes of the attackers; it is the number one argument you hear when you consider hiring one of them. So whatever we do to fend off the criminals is likely to hit these good guys as well.

Solving problems in the source code is the golden path to fixing vulnerabilities. I won’t argue with that. But if the last twenty years of web application security have taught us one lesson, it is that fixing the code is very hard. And if you have ever tried to convince an unwilling developer to set a security flag on a cookie (the secure flag of all things!), then you will probably realize that you need additional means to secure your systems beyond fixing the code.

I am an outspoken advocate of security in depth. I think we need multiple layers of security stacked behind each other (and between systems to thwart lateral movement). We can never know whether any security measure will fend off 100% of the attacks, but multiple 90% solutions can be combined to reach a decent level of security.
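To put a rough number on the 90% argument: assuming, optimistically, that two layers fail independently of each other, the fraction of attacks that gets through both is the product of their individual miss rates.

$$
P_{\text{pass}} = (1 - 0.9) \times (1 - 0.9) = 0.1 \times 0.1 = 0.01
$$

Under that assumption, two 90% layers stop 99% of the attacks. In practice, a payload crafted to evade one filter often evades similar ones too, so treat this as a best case rather than a guarantee.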

A well-configured Web Application Firewall is such an additional layer of security. As the author of the 2nd edition of the ModSecurity Handbook, I favor that choice, of course. After all, ModSecurity is the most popular WAF and the only really Open Source offering out there. But I won’t rule out the chance that some of the commercial products boasting artificial intelligence, smart autolearning and full cloud integration are more than marketing buzz. 😉

ModSecurity is a WAF engine. It is usually combined with the OWASP ModSecurity Core Rule Set (CRS), which empowers the WAF. The CRS saw a major 3.0 release last winter, hence CRS3. You might have seen the release poster back in the day.
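For readers new to this setup, a minimal sketch of how the engine and CRS3 are typically wired together in an Apache configuration might look like this. The installation paths are assumptions and differ between distributions; it also assumes mod_security2 is already loaded.

```apache
# Minimal sketch; the paths below are assumptions, adjust them to your installation.
SecRuleEngine On

# The CRS3 setup file comes first (Paranoia Level, anomaly thresholds, ...),
# followed by the rule files themselves. The glob skips the *.conf.example files.
Include /etc/modsecurity/crs/crs-setup.conf
Include /etc/modsecurity/crs/rules/*.conf
```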

So let’s say you are a pen-tester and you face a well-tuned CRS3 installation. Launching your security scanner and returning the next day to put your logo on the report will not do the trick. The scan will probably fail after the first few requests, and your report will end up in the bin.

You need to identify and disable the WAF first.

Identifying and fingerprinting a WAF is very easy. You just need to make sure you do it first, or you will lose a lot of time. Let’s take ModSecurity / CRS3 as an example and look at the options for fingerprinting its configuration settings.

I wrote a blog post about this last year, but it is somewhat outdated since we changed the rules with the release of CRS3.

Let me stick to the theory here; somebody else can then go and sort out the details. A standard plugin for the big web vulnerability scanners sounds like a great idea.

A Web Application Firewall examines the traffic and tries to identify attacks. It also tries to optimize performance and minimize false positives. That’s why it will only trigger alerts when it really thinks something is amiss.

There are many decisions to make and many compromises to strike in this game, and different products will decide differently. This makes fingerprinting easier.

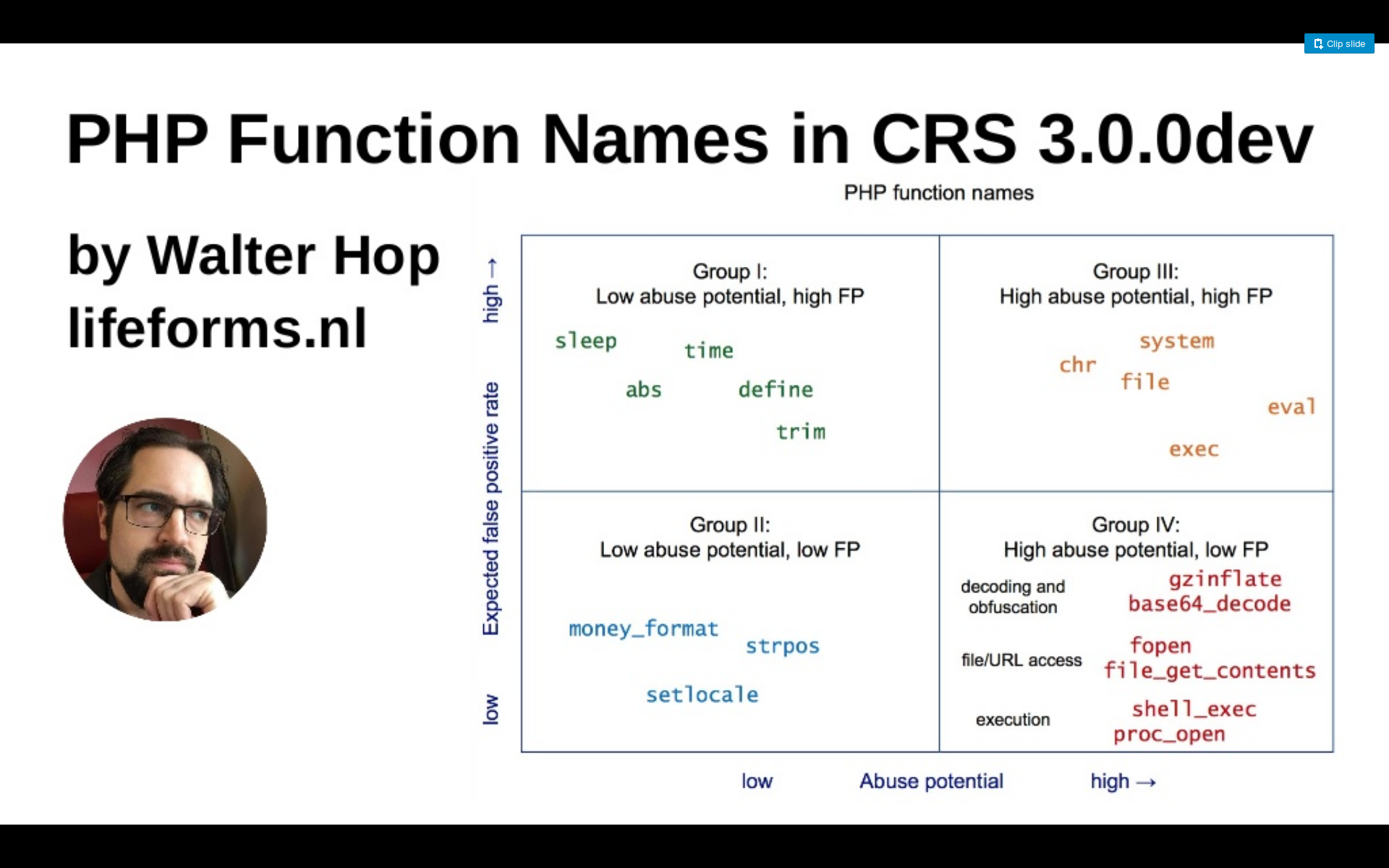

Let’s look at the concept behind the CRS3 PHP injection rules to see where the CRS project faced such decisions. The following is a slide from a presentation at the Area41 conference in June 2016.

So Walter Hop divided the PHP functions into four groups. The rule set handles each group differently, depending on how dangerous the functions are and how likely they are to provoke a false positive.

CRS3 features so-called Paranoia Levels. The higher the Paranoia Level, the more aggressive and insane the rules. This means more security, but also more false positives that need to be handled and that eat into the system administrator’s time.
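The Paranoia Level itself is a single setting in crs-setup.conf. The following sketch is modelled on the crs-setup.conf.example file shipped with CRS3; verify the rule ID and the variable name against the CRS version you actually run.

```apache
# Sketch modelled on crs-setup.conf.example (CRS3): select Paranoia Level 1 to 4.
SecAction \
    "id:900000,\
    phase:1,\
    nolog,\
    pass,\
    t:none,\
    setvar:tx.paranoia_level=1"
```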

We mapped the PHP function names onto the paranoia levels, so you can now play around with them and detect the paranoia level of a CRS3 installation quite easily.
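Why does this leak the paranoia level? CRS3 skips the rules of a higher level unless that level is enabled. The sketch below imitates that gating pattern; the rule IDs, the marker name and the matched function name are hypothetical and not taken from the CRS rule files.

```apache
# Hypothetical illustration of the CRS3 gating pattern; IDs, marker name
# and the function name are invented for this sketch.

# Jump past the PL2 rule below unless the Paranoia Level is 2 or higher.
SecRule TX:PARANOIA_LEVEL "@lt 2" \
    "id:10010,phase:2,pass,nolog,skipAfter:END-HYPOTHETICAL-PHP-PL2"

# A PL2 rule: adds the critical score when the made-up function name appears.
SecRule ARGS "@rx (?i)\bexample_php_function\s*\(" \
    "id:10011,phase:2,pass,log,msg:'Hypothetical PL2 PHP function match',\
    setvar:tx.anomaly_score=+%{tx.critical_anomaly_score}"

SecMarker END-HYPOTHETICAL-PHP-PL2
```

A probe carrying a PL2 function name is therefore only blocked if the installation runs at PL2 or above; repeating this with function names from each group narrows down the configured level.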

CRS3 runs in anomaly scoring mode by default. A request is blocked at a score of 5, which equals a single critical alert.

An operator can raise this anomaly threshold at his discretion. This is especially useful during the integration phase, when you are not yet sure how the WAF affects real-world traffic. To hit a higher threshold, you would then need to submit a payload multiple times within the same request (for example in several parameters), so that the respective rule triggers multiple times.
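The thresholds live in crs-setup.conf as well. A sketch modelled on the CRS3 example file, showing what the defaults look like (check the values against your version):

```apache
# Sketch modelled on crs-setup.conf.example (CRS3): block a request once its
# inbound anomaly score reaches 5, and a response once its outbound score reaches 4.
SecAction \
    "id:900110,\
    phase:1,\
    nolog,\
    pass,\
    t:none,\
    setvar:tx.inbound_anomaly_score_threshold=5,\
    setvar:tx.outbound_anomaly_score_threshold=4"
```

An operator raising the limit during integration would simply increase these two values.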

Most rules are considered critical, but there are also notice and warning level alerts that add lower scores. If you combine them, you have granular control over the anomaly score you raise. So by using different payloads and issuing them multiple times, you can steer your anomaly score very precisely. This allows you to determine the anomaly threshold and paranoia level settings of the ModSecurity / CRS3 WAF.
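The score each severity contributes is again defined in crs-setup.conf. This sketch follows the CRS3 example file; treat the numbers as the defaults, which an operator may have changed.

```apache
# Sketch modelled on crs-setup.conf.example (CRS3): score added per alert severity.
SecAction \
    "id:900100,\
    phase:1,\
    nolog,\
    pass,\
    t:none,\
    setvar:tx.critical_anomaly_score=5,\
    setvar:tx.error_anomaly_score=4,\
    setvar:tx.warning_anomaly_score=3,\
    setvar:tx.notice_anomaly_score=2"
```

Assuming these defaults and the default threshold of 5, a request triggering a single warning (3) passes, while a warning plus a notice (3 + 2 = 5) is blocked. Differences like this are what a tester can probe for.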

A WAF is only a deterrent. It has been shown time and time again that every WAF can be evaded. And people have managed to bypass CRS3 at PL4 (which recently cost me a cake). But it will cost an attacker time, and time is always limited, no matter whether you are a defender or an attacker. A WAF encumbers the attackers. It eats into their time and, hopefully, they look for an easier target. This does not work if the attacker is really coming after you and nobody else, but you would not remove the second lock on your door just because a hitman is after you. Physical security tells us that no lock and no bank safe is impenetrable. Safes are sold with information on how long they will typically withstand an attacker. I think WAFs and their paranoia levels need the same labels.

But let’s put the philosophy aside and assume you have identified the WAF correctly and you want to continue your tests without having to try and bypass the WAF by hand.

There is one advantage that a penetration tester has over a black hat: he can call the system administrators and ask them to switch off the protection, so he can actually test the application and not the WAF. Bounty hunters are in a less comfortable position, and I think more thought needs to go into allowing bug bounty programs in the light of A7. Osama Elnaggar wrote in with a proposal: the big bounty hunter sites could offer VPNs to their users, allowing bounty hunters to access their target services from a shared IP address. This blurs liability (and monitoring!), but it could help bounty hunting campaigns.

But how do you disable the WAF for an individual pen-tester in a sensible way?

You have multiple options. Let me show you three techniques that work well with CRS3.

Variant 1: Disable ModSecurity for a given client IP

Assuming the client is clean and IP spoofing does not work over the internet, this is a fairly good way to give an individual client a backdoor.

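```apache
# Replace 127.0.0.1 with the pen-tester's IP address.
# Load this rule before the CRS rule files, so it runs first in phase 1.
SecRule REMOTE_ADDR "@ipMatch 127.0.0.1" \
    "id:1000,phase:1,pass,log,msg:'Disabling rule engine',ctl:ruleEngine=off"
```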

If you are smart, you have a way to make this go away again after a certain amount of time. Think of an automatic rollback of the change, or include a chained rule that checks the time.

```apache
SecRule REMOTE_ADDR "@ipMatch 127.0.0.1" \
    "id:1000,phase:1,pass,log,msg:'Disabling rule engine',chain"
    SecRule TIME "@lt 20170601000000" "ctl:ruleEngine=off"
```

The ModSecurity rule language is rather hard to grasp, so if this is new to you, give it some time to really understand it. Notice how the ctl statement is linked to the second SecRule command, which has been chained to the first rule, while the msg is written together with the first rule but only executed once the second rule hits. It’s all a bit black magic, but the ModSecurity Handbook does a fairly good job of explaining this. Or check out the tutorials here at netnea.com to get a kick start.

Variant 2: Raise Anomaly Threshold for a given client IP

This has the advantage that you see all the alerts the rule set triggers during the test, even if the requests are no longer blocked. The disadvantage is that ModSecurity will write all these alerts and fill your logs. So use this with caution when working with a security scanner.

I have seen requests score over 1000. So if you raise the threshold, you had better raise it very high.

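```apache
# Replace 127.0.0.1 with the pen-tester's IP address and adjust the expiry timestamp.
# Load this after crs-setup.conf; otherwise rule 900110 there, if enabled,
# would reset the thresholds later in phase 1.
SecRule REMOTE_ADDR "@ipMatch 127.0.0.1" \
    "id:1000,phase:1,pass,log,msg:'Raising anomaly limit',chain"
    SecRule TIME "@lt 20170601000000" \
        "setvar:tx.inbound_anomaly_score_threshold=10000,\
        setvar:tx.outbound_anomaly_score_threshold=10000"
```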

Variant 3: Disable ModSecurity for a given client certificate

Basing a security decision on authentication is tricky to get right. The situation is a bit different with client certificates, as they are not part of the HTTP request but part of the SSL/TLS handshake that precedes the evaluation of the HTTP payload. This means that authentication is complete by the time ModSecurity starts (prior to phase 1 in ModSecurity speak). Given that client certificates are also harder to steal than passwords, disabling ModSecurity (or raising the scoring limit) for a given client certificate seems viable. We’ll identify the certificate via its serial number, but you can use any information you like.

However, it takes some acrobatics to get this right. The main problem is that ModSecurity cannot directly access the trove of information that mod_ssl shares with the web server. We need to transfer the information into an environment variable first. Usually you would do this via SetEnvIf (or SetEnv, for that matter), but these do not have access to the SSL/TLS information either: ModRewrite to the rescue.

Now, ModRewrite runs fairly late in the request lifecycle. From a ModSecurity perspective, ModRewrite happens between phase 1 and phase 2. This means we cannot disable the ModSecurity engine before phase 1 runs. But we can stop it before phase 2 runs and thus before the evaluation of the anomaly score happens.

I have not tried this out in a production setup and I think it looks rather brittle. But it’s the best I could come up with in this regard.

CAVEAT: This assumes client cert authentication is properly implemented and the use of a specific CA is enforced. Run this on an optional client cert configuration and you have built yourself a public backdoor.
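One way to meet that requirement with mod_ssl is to make the client certificate mandatory and to trust only a dedicated CA. A sketch, where the CA file path is a placeholder for your own pen-test CA:

```apache
# Sketch: require a client certificate issued directly by one dedicated CA.
# The path is a placeholder; point it at the CA you issue pen-test certificates from.
SSLVerifyClient require
SSLVerifyDepth  1
SSLCACertificateFile /etc/apache2/ssl/pentest-ca.crt
```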

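```apache
# Copy the client certificate serial number from mod_ssl into an
# environment variable that ModSecurity can read in phase 2.
RewriteEngine On
RewriteOptions InheritDownBefore
RewriteCond %{SSL:SSL_CLIENT_M_SERIAL} "(.*)"
RewriteRule / - [ENV=MY_CLIENT_CERT_SERIAL:%1]

# Log the serial number found in the environment variable.
SecRule ENV:MY_CLIENT_CERT_SERIAL "@unconditionalMatch" \
    "id:1000,phase:2,pass,log,msg:'Client cert serial identified: %{MATCHED_VAR}'"

# Disable the rule engine for serial 1337 until the expiry timestamp.
SecRule ENV:MY_CLIENT_CERT_SERIAL "^1337$" \
    "id:1001,phase:2,pass,log,msg:'Disabling rule engine',chain"
    SecRule TIME "@lt 20170525000000" "ctl:ruleEngine=off"
```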

Obviously, you can also raise the anomaly threshold instead of disabling the rule engine completely.
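A sketch of that alternative, reusing the environment variable and the placeholder serial from the client certificate example and mirroring Variant 2. It is untested; it would take the place of rule 1001 and has to be loaded before the CRS rule files, so it runs ahead of the CRS blocking evaluation in phase 2.

```apache
# Untested sketch: raise the thresholds for certificate serial 1337 instead of
# switching the rule engine off. Load before the CRS rule files.
SecRule ENV:MY_CLIENT_CERT_SERIAL "^1337$" \
    "id:1001,phase:2,pass,log,msg:'Raising anomaly limit',chain"
    SecRule TIME "@lt 20170525000000" \
        "setvar:tx.inbound_anomaly_score_threshold=10000,\
        setvar:tx.outbound_anomaly_score_threshold=10000"
```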

TL;DR: Let me sum up this whole blog post. We have established that pen-testers are affected by people trying to satisfy the OWASP Top Ten’s potential new A7 item (Insufficient Attack Protection), and by Web Application Firewalls in general. I have then explained a technique to quickly fingerprint a WAF (ModSecurity with the Core Rule Set 3.0 in our example) and laid down a few configuration examples that give a penetration tester a way to bypass ModSecurity.

[EDIT: Added Osama Elnaggar’s idea to provide VPNs for bounty hunters.]

Christian Folini Follow @ChrFolini Tweet